2022 Federal Index

Use of Evidence in Competitive Grant Programs

Did the agency use evidence of effectiveness when allocating funds from its competitive grant programs in FY22 (examples: tiered-evidence frameworks, evidence-based funding set-asides, priority preference points or other preference scoring for evidence, and pay for success provisions)?

Score

15

Millennium Challenge Corporation

8.1 What were the agency’s five largest competitive programs and their appropriations amount (and were city, county, and/or state governments eligible to receive funds from these programs)?

- MCC awards all of its agency funds through two competitive grant programs: compacts and threshold programs.

8.2 Did the agency use evidence of effectiveness to allocate funds in its five largest competitive grant programs (e.g., were evidence-based interventions/practices required or suggested and was evidence a significant requirement)?

- For country partner selection under both the compact and threshold competitive programs, MCC uses twenty indicators in three categories (economic freedom, investing in people, and ruling justly) to determine a country’s eligibility for program assistance. These objective indicators of a country’s performance are collected by independent third parties. MCC regularly reviews the scorecard to ensure that its indicators use the best available data, and when third-party sources publish new or better data, MCC updates the scorecard so that it captures policy performance comprehensively.

- When considering a second compact, MCC further considers whether the country has (1) performed successfully on its previous compact, (2) improved its scorecard performance during the partnership, and (3) shown a continued commitment to sector reform efforts in any subsequent partnership. As a result, the MCC Board of Directors applies an even higher standard when selecting countries for subsequent compacts. According to MCC’s policy for Compact Development Guidance (p. 6): “As the results of impact evaluations and other assessments of the previous compact program become available, the partner country must use these data to inform project proposal assessment, project design, and implementation approaches.”

8.3 Did the agency use its five largest competitive grant programs to build evidence (e.g., requiring grantees to participate in evaluations)?

- Under its Policy for M&E, MCC requires an independent evaluation of every project, guided by defined evaluation questions throughout the project’s lifetime and beyond, to assess progress toward outputs and outcomes and to support program learning. As described above, MCC publicly releases all of these evaluations on its MCC Evidence Platform and, in collaboration with stakeholders and partner countries, uses their findings to build evidence in the field so that policymakers in the United States and in partner countries can draw on MCC’s experience when developing future programming. The same policy requires MCC projects to submit quarterly indicator tracking tables showing progress toward projected targets.

8.4 Did the agency use evidence of effectiveness to allocate funds to any other competitive grant programs (besides its five largest grant programs)?

- Millennium Challenge Corporation uses evidence of effectiveness to allocate funds in all its competitive grant programs as noted above.

8.5 What are the agency’s one or two strongest examples of how competitive grant recipients achieved better outcomes and/or built knowledge of what works or what does not?

- Millennium Challenge Corporation’s $358,000,000 Lesotho Compact funded a $17,900,000 land administration reform activity within the Private Sector Development Project. The activity supported land policy and regulatory reform, streamlined land administration procedures, created the Land Administration Authority, conducted public outreach, and supported systematic land regularization. The activity was based on the theory that strengthened tenure and efficient land administration would reduce land conflict, drive formal land transactions, and increase investment and use of land as collateral, which would contribute to private sector development, especially for women.

- An independent evaluation of the investment found that:

- Women’s tenure improved with a 55 percentage point increase in women’s sole or joint ownership of newly registered parcels.

- Systematic land regularization reduced concerns about land conflict by 5 percentage points for female-headed households but had no effect on fear of losing land.

- The adoption of the 2010 Land Act and establishment of the Land Administration Authority were associated with significant reductions in transaction time. The average time to register a land transfer and mortgage fell by more than 67% and 80%, respectively.

- The activity catalyzed an increase in land transfers, from 165 a year in 2010 to 1,075 a year in 2019.

- Policy and institutional reforms activated credit markets, more than doubling the number of mortgages and increasing the share of mortgages issued jointly or in the name of women.

- Systematic land regularization in informal settlements did not result in land investment.

- The corporation is applying the evidence from these outcomes to a subsequent investment in Lesotho as well as to other land sector projects around the world.

8.6 Did the agency provide guidance that makes clear that city, county, and state government, and/or other grantees can or should use the funds they receive from these programs to conduct program evaluations and/or to strengthen their evaluation capacity building efforts?

- As described above, MCC develops an M&E plan for every grantee that describes the independent evaluations to be conducted, the key evaluation questions and methodologies, and the data collection strategies to be used. Grantees therefore use program funds for evaluation.

- MCC’s Policy for Monitoring and Evaluation stipulates that the “primary responsibility for developing the M&E Plan lies with the MCA [grantee] M&E Director with support and input from MCC’s M&E Lead and Economist. MCC and MCA Project/Activity Leads are expected to guide the selection of the indicators at the process and output levels that are particularly useful for management and oversight of activities and projects.” The M&E policy is intended primarily to guide MCC and partner country staff decisions to utilize M&E effectively throughout the entire program life cycle in order to improve outcomes. All MCC investments also include M&E capacity building for grantees.

Score

15

U.S. Department of Education

8.1 What were the agency’s five largest competitive programs and their appropriations amount (and were city, county, and/or state governments eligible to receive funds from these programs)?

- ED’s top five program accounts based on actual appropriation amounts in FY22 are:

- TRIO ($1.1 billion; eligible grantees: institutions of higher education and public and private organizations);

- Charter Schools Program ($440 million; eligible grantees vary by program and include state entities, charter management organizations, public and private entities, and local charter schools);

- GEAR UP ($378 million; eligible grantees: state agencies and partnerships that include IHEs and LEAs);

- Comprehensive Literacy Development Grants ($192 million; eligible grantees: state educational agencies);

- Teacher and School Leader Incentive Program (TSL) ($173 million; eligible grantees: local educational agencies, partnerships between state and local educational agencies, and partnerships between nonprofit organizations and local educational agencies).

8.2 Did the agency use evidence of effectiveness to allocate funds in its five largest competitive grant programs? (e.g., Were evidence-based interventions/practices required or suggested? Was evidence a significant requirement?)

- ED uses evidence of effectiveness when making awards in its largest competitive grant programs.

- Each of the FY 2022 competitions for new awards under the Upward Bound, Upward Bound Math and Science, Veterans Upward Bound, and McNair Postbaccalaureate Achievement programs included a competitive preference priority for projects proposing strategies supported by evidence that demonstrates a rationale. These competitions award points to applicants that propose a project with a key component in its logic model that is informed by research or evaluation findings suggesting it is likely to improve relevant outcomes. FY 2022 funding also supported continuation awards to grantees that succeeded in prior competitions that encouraged applicants to provide evidence that demonstrates a rationale (through competitive preference priorities in the FY 2021 Talent Search and Educational Opportunity Centers competitions and through a selection factor in the FY 2020 Student Support Services competition).

- Under the Charter Schools Program, as in several other programs agency-wide, ED generally requires or encourages applicants to support their projects with logic models, and it uses other requirements and selection criteria to promote evidence-based practices and demonstrated effectiveness. In the FY22 competitions for Developers (Grants for the Opening of New Charter Schools, ALN 84.282B, and Grants for the Replication and Expansion of High-Quality Charter Schools, ALN 84.282E) and for State Entities (ALN 84.282A), the Department included a selection criterion awarding points based on the extent to which the project demonstrates a rationale, meaning that a key project component included in the project’s logic model is informed by research or evaluation findings suggesting that the component is likely to improve relevant outcomes. In the Developer competitions, the Department required all applicants to provide a logic model, and for the Replication and Expansion program (84.282E) it also included a selection criterion related to the quality of the applicant and the extent to which the applicant has a prior track record of success. A portion of the FY22 CSP funding was also used to fund additional unfunded applicants from the FY21 Credit Enhancement competition, which awarded points based on the extent to which the project focused on assisting charter schools with a likelihood of success and the greatest demonstrated need for assistance under the program. Finally, a portion of FY22 funds was dedicated to continuation awards to grantees who competed successfully in prior competitions, including the 2020 Charter Management Organization cohort, whose competition also required a logic model and evidence of a track record of success.

- For GEAR UP, the Department used a portion of FY 2022 funding for new awards to high-scoring unfunded applicants from the FY 2021 competitions, which included competitive preference priorities for strategies based on moderate evidence (State competition) and promising evidence (Partnership competition). In addition, the majority of FY 2022 funding supported continuation awards to States and Partnerships that competed successfully in previous competitions, including the FY 2021 competitions, the FY 2019 State competition (which included a competitive preference priority for strategies based on promising evidence), the FY 2018 competitions (which included a selection factor for strategies that demonstrate a rationale), and the FY 2017 competitions (which included a competitive preference priority for strategies based on moderate evidence of effectiveness).

- The TSL statute requires applicants to provide a description of the rationale for their project and describe how the proposed activities are evidence-based, and grantees are held to these standards in the implementation of the program.

- The Comprehensive Literacy Development (CLD) statute requires grantees to provide subgrants to local educational agencies that conduct evidence-based literacy interventions. ESSA requires ED to give priority to applicants that meet the higher evidence levels of strong or moderate evidence and, where few evidence-based literacy strategies or interventions are available (for example, in early childhood education), to encourage applicants to demonstrate a rationale.

8.3 Did the agency use its five largest competitive grant programs to build evidence? (e.g., requiring grantees to participate in evaluations)

- The Evidence Leadership Group (ELG) advises program offices on ways to incorporate evidence in grant programs through encouraging or requiring applicants to propose projects that are based on research and by encouraging applicants to design evaluations for their proposed projects that would build new evidence.

- ED’s grant programs require some form of annual evaluation report to build evidence, demonstrate performance improvement, and account for the use of funds. For example, see the annual performance reports of TRIO, the Charter Schools Program, and GEAR UP. ESSA requires a national evaluation of the Teacher and School Leader Incentive Program, and the Comprehensive Literacy Development Grant requires evaluation reports. In addition, IES is currently conducting rigorous evaluations to identify successful practices in TRIO-Educational Opportunity Centers and GEAR UP. In FY19, IES released a rigorous evaluation of practices embedded within TRIO-Upward Bound that examined the impact of enhanced college advising practices on students’ pathways to college.

8.4 Did the agency use evidence of effectiveness to allocate funds in any other competitive grant programs (besides its five largest grant programs)?

- The Education Innovation and Research (EIR) program supports the creation, development, implementation, replication, and scaling of entrepreneurial, evidence-based, field-initiated innovations designed to improve student achievement and attainment for high-need students. The program uses three evidence tiers to allocate funds, giving larger awards to applicants who can demonstrate stronger prior evidence and who will produce stronger evidence of effectiveness through a rigorous, independent evaluation. The FY21 competition provided checklists, a webinar, and PowerPoint presentations to help applicants understand the evidence requirements.

- ED incorporates the evidence standards established in EDGAR as priorities and selection criteria in many competitive grant programs. In addition, the Secretary’s Supplemental Priorities that can be used in the Department’s grant programs advance areas supported by evidence, and include evidence-based approaches in various ways.

8.5 What are the agency’s 1-2 strongest examples of how competitive grant recipients achieved better outcomes and/or built knowledge of what works or what does not?

- The EIR program supports the creation, development, implementation, replication, and scaling up of evidence-based, field-initiated innovations designed to improve student achievement and attainment for high-need students. IES released The Investing in Innovation Fund: Summary of 67 Evaluations, which can be used to inform efforts to move to more effective practices. An update to that report, capturing more recent grants, is currently being developed. ED continues to explore the results of these studies, and other feedback received from grantees and the public, to determine how lessons from EIR can strengthen both that and other ED programs.

8.6 Did the agency provide guidance which makes clear that city, county, and state government, and/or other grantees can or should use the funds they receive from these programs to conduct program evaluations and/or to strengthen their evaluation capacity-building efforts?

- In 2016, ED released non-regulatory guidance to provide state educational agencies, local educational agencies (LEAs), schools, educators, and partner organizations with information to assist them in selecting and using “evidence-based” activities, strategies, and interventions, as defined by ESSA, including carrying out evaluations to “examine and reflect” on how interventions are working. However, the guidance does not specify that federal competitive funds can be used to conduct such evaluations. Frequently, though, programs do include a requirement to evaluate the grant during and after the project period.

Score

10

U.S. Agency for International Development

8.1 What were the agency’s five largest competitive programs and their appropriations amount (and were city, county, and/or state governments eligible to receive funds from these programs)?

- USAID’s top five program accounts based on actual appropriation amounts in FY22 are:

- International Disaster Assistance: $4,400,000,000; eligible grantees: any U.S. or non-U.S. organization, individual, nonprofit, or for-profit entity that meets the requirements described in ADS 303;

- Migration and Refugee Assistance: $3,430,000,000; eligible grantees: any U.S. or non-U.S. organization, individual, nonprofit, or for-profit entity that meets the requirements described in ADS 303;

- Development Assistance: $3,500,000,000; eligible grantees: any U.S. or non-U.S. organization, individual, nonprofit, or for-profit entity that meets the requirements described in ADS 303;

- Global Health (USAID): $3,200,000,000; eligible grantees: any U.S. or non-U.S. organization, individual, nonprofit, or for-profit entity that meets the requirements described in ADS 303;

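- Economic Support Fund: $3,080,000,000; eligible grantees: any U.S. or non-U.S. organization, individual, nonprofit, or for-profit entity that meets the requirements described in ADS 303.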

- The U.S. Foreign Assistance Reference Guide provides more information on each of these accounts. More information can also be found in the FY22 Congressional Budget Justification. When awarding grants and cooperative agreements, USAID generally does not limit eligibility; eligibility may be restricted for an individual notice of funding opportunity in accordance with the procedures in ADS 303.

8.2 Did the agency use evidence of effectiveness to allocate funds in its five largest competitive grant programs (e.g., were evidence-based interventions/practices required or suggested and was evidence a significant requirement)?

- The agency is committed to using evidence of effectiveness in all of its competitive contracts, cooperative agreements, and grants, which comprise the majority of its work. The USAID Program Cycle Policy ensures that evidence from monitoring, evaluation, and other sources informs funding decisions at all levels, including during strategic planning, project and activity design, procurement, and implementation.

- The agency’s Senior Obligation Alignment Review helps to ensure that the agency is using evidence to design and approve funding for innovative approaches to provide long-term sustainable outcomes and provides oversight on the use of grant or contract mechanisms and proposed results.

- For contracts, USAID counts past performance as 30% of the non-cost evaluation criteria. For grant awards, USAID’s policy requires an applicant to list all of its cost-reimbursement contracts, grants, or cooperative agreements involving similar or related programs during the past three years. The Grant Selection Committee chair must validate the applicant’s past performance reference information against existing evaluations to the maximum extent possible and must make a reasonable, good-faith effort to contact all references to verify or corroborate how well the applicant performed.

- For assistance, as required by 2 CFR 200, USAID also does a risk assessment to review an organization’s ability to meet the goals and objectives outlined by the agency. Internal procedures for conducting the risk assessment are found in ADS 303.3.9, with guidance on how to look for evidence of effectiveness from potential grantees. According to ADS, this can be done through reviewing past performance and evaluation/performance reports such as the Contractor Performance Assessment Reporting System (CPARS).

- Even though there is no federal requirement to do so (as there is for contractors through CPARS), USAID also assesses grantee past performance when making funding decisions (detailed in ADS 303, p. 66). Under ADS 303, before awarding any grant or cooperative agreement, the agreement officer must state in the memorandum of negotiation that the applicant has a satisfactory record of performance. When making the award, the agreement officer may consider withholding authority to proceed to the next phase of a grant until the recipient provides evidence of acceptable performance within a given period.

- In a report published on September 5, 2018, Managing for Results: Government-wide Actions Needed to Improve Agencies’ Use of Performance Information in Decision Making (GAO-18-609SP), GAO recognized USAID as one of four agencies (of 23 surveyed) with proven practices for using performance information. Additionally, USAID was the only CFO Act agency with a statistically significant increase in the Agency Use of Performance Information Index since 2007.

- To help vaccinate the world, save lives, and provide critical humanitarian assistance, USAID is programming $5,175,000,000 in COVID-19 global response funds received under the American Rescue Plan Act of 2021 in more than 115 countries. The agency is working with local and international partners, partner governments, and civil society to deliver and distribute vaccines; protect and train health workers; support risk communication and community engagement; support infection prevention and control; strengthen diagnostic and surveillance systems; improve case management, including by increasing access to oxygen; deliver emergency food assistance, humanitarian services, supplies, and response training; support continuity of basic services; and mitigate the social and economic impacts of the pandemic.

8.3 Did the agency use its five largest competitive grant programs to build evidence (e.g., requiring grantees to participate in evaluations)?

- Grantees report on the progress of activities through documentation such as Activity Monitoring, Evaluation, and Learning Plans, periodic performance reports, and external and internal evaluation reports (if applicable). These reports help USAID remain transparent and accountable and help it build evidence of what does and does not work in its interventions. Any internal evaluation undertaken by a grantee must also be provided to USAID for learning purposes. Grantees must submit all datasets compiled under USAID-funded projects, activities, and evaluations to the USAID Development Data Library (DDL). All final evaluation reports must also be submitted to the agency’s Development Experience Clearinghouse (DEC) unless a waiver of USAID’s public dissemination requirements has been granted. Such waivers are rare and require the concurrence of the director of the Office of Learning, Evaluation, and Research (LER).

8.4 Did the agency use evidence of effectiveness to allocate funds in any other competitive grant programs (besides its five largest grant programs)?

- USAID actively uses evidence of effectiveness to allocate funds. For example, the DIV program uses a tiered funding approach to find, test, and scale evidence-based innovations. Its grants include stage 1 for piloting (up to $200,000), stage 2 for testing and positioning for scale (up to $1,500,000), stage 3 for transitioning to scale (up to $15,000,000), and evidence generation (up to $1,500,000) for research to determine the causal impact of interventions that have already scaled. For stage 2 grants in particular, DIV requires rigorous evidence of causal impact: the grantee must either already have rigorous underlying evidence, use the grant to run an evaluation with an evaluation partner, or run an evaluation with its own funding during the grant period.

- As part of the grant awards process, Grand Challenges, such as the Water and Energy for Food Grand Challenge and the earlier Securing Water for Food Grand Challenge, collaborate with innovators to set ambitious results targets and make eligibility for subsequent funding contingent on demonstrated evidence of hitting those targets.

- Development Innovation Ventures’ evaluation criteria are based on its three core principles, as outlined in its annual grant solicitation (DIV Annual Program Statement): (1) evidence of impact, (2) cost-effectiveness, and (3) potential for scale and financial sustainability. Expectations vary by stage, but every awardee must report against a set of pre-negotiated key performance indicators. Most DIV grants are fixed amount awards, a type of federal grant instrument well suited to pay-for-results approaches. Fixed amount awards pay for milestones achieved, which emphasizes performance (not just compliance) and reduces some of the administrative burden for all parties [2 CFR 200.201(b)].

- Development Innovation Ventures supports innovative solutions across all countries and development sectors in which USAID operates, including education, agriculture, water, energy, and economic development. Since 2010, DIV has provided more than $174,000,000 for 255 grants across forty-seven countries, reaching more than 55,000,000 beneficiaries. According to research led by Nobel Prize-winning economist and DIV advisor Dr. Michael Kremer and announced in October 2020, a subset of grants from DIV’s early portfolio (2010-2012) has produced $17 in social benefits for every dollar spent by USAID.

- As USAID pursues its localization agenda, it has announced several notices of funding opportunity that include funding for organizations in the countries where USAID works, for example, Civic Engagement in Local Governance for Accountability in Sierra Leone, USAID Education: Equity and Inclusion, the New Partnerships Initiative Conflict Prevention and Recovery Program, and the Local Entities Advancing and Driving Health Responses (LEADR) Activity.
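The DIV stage structure described above can be sketched as a simple eligibility check. This is a hypothetical illustration, not DIV tooling: the stage keys and function names are assumptions, and only the dollar ceilings and the three routes to stage 2 evidence come from the text.

```python
# Hypothetical sketch of DIV's tiered funding ceilings (figures from the text).
# Stage keys and function names are illustrative assumptions, not a DIV API.
DIV_STAGE_CEILINGS_USD = {
    "stage_1_pilot": 200_000,
    "stage_2_test_and_position": 1_500_000,
    "stage_3_transition_to_scale": 15_000_000,
    "evidence_generation": 1_500_000,
}


def within_ceiling(stage: str, requested_usd: int) -> bool:
    """Return True if a funding request does not exceed the ceiling for its stage."""
    if stage not in DIV_STAGE_CEILINGS_USD:
        raise ValueError(f"unknown DIV stage: {stage!r}")
    return requested_usd <= DIV_STAGE_CEILINGS_USD[stage]


def stage_2_evidence_route_ok(
    has_prior_rigorous_evidence: bool,
    evaluates_with_grant_funds: bool,
    evaluates_with_own_funds: bool,
) -> bool:
    """Stage 2 needs rigorous causal evidence via at least one of three routes:
    existing rigorous evidence, an evaluation partner paid from the grant,
    or an evaluation the grantee funds itself during the grant period."""
    return has_prior_rigorous_evidence or evaluates_with_grant_funds or evaluates_with_own_funds


if __name__ == "__main__":
    print(within_ceiling("stage_1_pilot", 150_000))  # True
    print(within_ceiling("stage_2_test_and_position", 2_000_000))  # False
    print(stage_2_evidence_route_ok(False, False, False))  # False
```

Actual DIV decisions also weigh cost-effectiveness and potential for scale, which this check does not attempt to model.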

8.5 What are the agency’s 1-2 strongest examples of how competitive grant recipients achieved better outcomes and/or built knowledge of what works or what does not?

- Development Innovation Ventures specifically emphasizes rigorous evidence of causal impact in its official grant solicitation (DIV Annual Program Statement, p. 4): “DIV supports the piloting of early stage innovations, funds research to test new and traditional solutions to development challenges, and helps those innovations that have successfully demonstrated impact to transition to scale. DIV looks for different indicators of impact and requires different degrees of rigor in evaluation methodologies depending on the stage of financing that the applicant is seeking and on the innovation’s proposed pathway to scale.”

- Evaluation criteria are based on DIV’s three core principles as further outlined in its Annual Program Statement: (1) evidence of impact, (2) cost effectiveness, and (3) potential for scale and financial sustainability.

- Fenix offers expandable, lease-to-own solar home systems financed through ultra-affordable installments paid over mobile money. In 2016, Fenix (now part of ENGIE Energy Access) partnered with USAID’s Scaling Off-Grid Energy Grand Challenge team to support its expansion from Uganda into Zambia, a nascent and largely underserved market. By the end of its DIV award, Fenix was the leading solar home system company in Zambia. In 2017, Fenix was acquired by ENGIE, a multinational electric utility company, and expanded into four new countries: Benin, Côte d’Ivoire, Nigeria, and Mozambique. Fenix has delivered clean, affordable energy to 3.5 million people across six countries in Africa.

- EarthEnable is a social enterprise that has developed durable adobe floor replacements for traditional dirt floors. EarthEnable flooring minimizes exposure to bacteria and parasites, particularly for children, and is 70% less expensive than other clean floor alternatives. Early DIV investments helped EarthEnable test different business models and scale up operations, expanding its geographic reach and enabling it to serve lower-income households. To date, EarthEnable has replaced more than 5,000 dirt floors and served more than 20,000 people in Rwanda and Uganda.

- In 2013, DIV funded a randomized controlled trial to evaluate the causal impact of Teaching at the Right Level, a program implemented by Pratham, an Indian nongovernmental organization. Although progress has been made in getting more children into school, millions of students are not learning at their grade level. Teaching at the Right Level helps lagging students catch up by teaching to their skill level rather than to their age or grade. The approach divides children (generally in grades 3 to 5) into groups based on learning needs rather than age or grade, dedicates time to basic skills rather than focusing solely on the curriculum, and assesses student performance regularly rather than relying only on end-of-year examinations. In 2017, DIV partnered with J-PAL Africa, UNICEF, USAID/Zambia, and the Zambian Ministry of General Education to scale Teaching at the Right Level across Zambia. To date, DIV’s support has helped catalyze more than $25,000,000 in additional funding beyond USAID to scale the model to 12 countries across Africa.

- The Intelligent Forecasting Competition incentivized competitors to use data from health care facilities in Côte d’Ivoire to develop intelligent forecasting methods for family planning commodities and to test whether those models outperformed traditional pen-and-paper forecasts. They did. Insights from the prize-winning model are now being tested in a grant to implement intelligent forecasting methods in Côte d’Ivoire’s health facilities. If evidence from the field suggests that intelligent forecasting methods outperform traditional forecasts, this approach will be mainstreamed in USAID’s global health commodities procurements, which exceed $16,000,000,000 in U.S. taxpayer investments.

8.6 Did the agency provide guidance which makes clear that city, county, and state government, and/or other grantees can or should use the funds they receive from these programs to conduct program evaluations and/or to strengthen their evaluation capacity building efforts?

- The agency’s Program Cycle Policy states that “funding may be dedicated within a project or activity design for implementing partners to engage in an internal evaluation for institutional learning or accountability purposes.”

Score

13

AmeriCorps

8.1 What were the agency’s five largest competitive programs and their appropriations amount (and were city, county, and/or state governments eligible to receive funds from these programs)?

- In FY22, AmeriCorps’ largest competitive grant programs are the following:

- AmeriCorps state and national program (excluding state formula grant funds): $277,694,600; eligible grantees: nonprofit organizations, state governments, tribal governments, local governments, institutions of higher education.

- Senior Corps RSVP program: $53,956,000; eligible grantees: nonprofit organizations, local governments.

8.2 Did the agency use evidence of effectiveness to allocate funds in its five largest competitive grant programs (e.g., were evidence-based interventions/practices required or suggested and was evidence a significant requirement)?

- AmeriCorps’ State and National grants program (excluding state formula grant funds) allocated as many as 44 out of 100 points to organizations that submitted applications supported by performance and evaluation data in FY22. Specifically, up to 24 points can be assigned to applications with theories of change supported by relevant research literature, program performance data, or program evaluation data; up to 20 points can be assigned for an applicant’s incoming level of evidence and the quality of the evidence. Further, in 2022 AmeriCorps prioritized the funding of specific education, economic opportunity, and health interventions with moderate or strong levels of evidence.

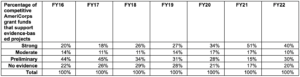

- Since AmeriCorps began assigning specific points for level of evidence, the share of grant dollars allocated to the strong, moderate, preliminary, and no-evidence categories has shifted over time (see table below): more grant dollars have gone to applicants with strong and moderate evidence for their proposed interventions, and fewer to applicants with little or no evidence of effectiveness.

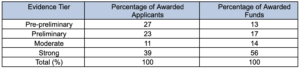

- In the education focus area, Exhibit 1 (below) shows that half (50%) of awarded applicants provided moderate or strong evidence, and these two tiers together received 70% of allocated funds. Across all evidence tiers, the pre-preliminary tier, at 27% of awarded applicants, was second only to the strong tier, yet it received the smallest share of awarded funds (13%).

Exhibit 1: Percentage of Awarded Applicants and Awarded Funds by Evidence Tier, ASN Awarded Applicants in Education Focus Area (n = 79)

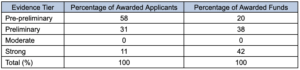

- The exhibit below shows that, in the economic opportunity focus area, a majority of awarded applicants (58%) provided pre-preliminary evidence; this tier received 20% of allocated funds. The preliminary tier accounted for the next-largest share of awarded applicants (31%) and received 38% of allocated funds. Although only 11% of awarded applicants provided strong evidence, this tier received the largest share of funds (42%).

Exhibit 2: Percentage of Awarded Applicants and Awarded Funds by Evidence Tier, ASN Awarded Applicants in Economic Opportunity Focus Area (n = 34)

- AmeriCorps Seniors program staff are discussing the most feasible way to integrate research evidence into the RSVP grant competition. The program director and the director of the Office of Research and Evaluation (ORE) have discussed requiring RSVP grantees to use best practices for volunteer management. Staff have also discussed ways to inform station-level volunteer management with research on managing multiple life roles.

- Given the organizational capacity of many RSVP grantees and the size of the grants AmeriCorps awards to them, supporting the integration of research-based practice into the grant’s performance expectations and reporting appears to be the most reasonable approach to evidence-based grant making for this program. If AmeriCorps Seniors pursues this strategy, the program will provide technical assistance to RSVP grantees and a possible pathway to certification [e.g., Council for Certification in Volunteer Administration (CCVA)]. Embedding evidence-based practices into organizational operations may also help ensure that AmeriCorps resources remain accessible to the communities and community-based organizations most in need of national service.

8.3 Did the agency use its five largest competitive grant programs to build evidence (e.g., requiring grantees to participate in evaluations)?

- AmeriCorps state and national grantees are required to evaluate their programs under the grant’s terms and conditions. Grantees receiving more than $500,000 must conduct an independent external evaluation (see p. 27 of the FY22 notice of funding for a description of these requirements). AmeriCorps program models vary. The state and national program model relies on competitive and formula grants that organizations use to sponsor national service corps members; these grants are managed by state commissions. Members support projects (or program interventions) delivered by their sponsor organizations, and these projects are designed to improve educational, employment, health, and environmental outcomes in their communities. Projects are well defined with respect to their target populations, their core activities and components (including dosage and modalities), and their intended outcomes. Program participation is also typically well defined, occurring within a specified period with clear beginning and end dates. As a result, these models are highly amenable to program evaluations that use research designs capable of causal attribution.

8.4 Did the agency use evidence of effectiveness to allocate funds in any other competitive grant program (besides its five largest grant programs)?

- AmeriCorps administers only two competitive grant programs, described above.

8.5 What are the agency’s 1-2 strongest examples of how competitive grant recipients achieved better outcomes and/or built knowledge of what works or what does not?

- AmeriCorps has summarized the accomplishments of its competitive grant programs in a series of research briefs that describe the core components of effective interventions in the areas of education, economic opportunity, environmental stewardship, and health. The education brief was used to justify the FY19 funding priority for evidence-based interventions in the AmeriCorps state and national competition. All interventions described in these briefs illustrate how AmeriCorps’ competitive grant recipients have achieved better outcomes and built knowledge about what works. The agency released additional return-on-investment studies in FY22, all of which had positive findings.

8.6 Did the agency provide guidance that makes clear that city, county, and state government, and/or other grantees can or should use the funds they receive from these programs to conduct program evaluations and/or to strengthen their evaluation capacity building efforts?

- AmeriCorps’ state and national grantees, including city, county, tribal, and state governments, are required to use their AmeriCorps funds to evaluate their programs. In FY22, AmeriCorps awarded $8.5 million in Training and Technical Assistance Commission Investment Fund Grants (see the 2022 Terms and Conditions) to support state commissions, which are typically housed within state government. Approximately one-third of these grants will focus on building the capacity of state commissions and their grantees to collect and use performance and evaluation data.

- AmeriCorps’ Evidence Exchange includes a suite of scaling products to help grantees replicate evidence-based interventions.

Score

10


U.S. Department of Labor

8.1 What were the agency’s five largest competitive programs and their appropriations amount (and were city, county, and/or state governments eligible to receive funds from these programs)?

- In FY22, the five largest competitive grant programs and their appropriation amounts were:

- Senior Community Service Employment Program: approximately $401,000,000 in continuation funds; eligible applicants are non-profit organizations, federal agencies, and tribal organizations.

- Apprenticeship Building America Grants: approximately $122,000,000; eligible applicants are nonprofits, labor organizations, public and state institutions of higher education, and county governments.

- National Farmworker Jobs Program: approximately $95,000,000 in continuation funds; eligible applicants are entities with an understanding of the problems of eligible migrant and seasonal farmworkers.

- YouthBuild: approximately $90,000,000; eligible applicants are public and private nonprofit agencies.

- Indian and Native American Program Employment and Training Grants: approximately $72,000,000; eligible applicants generally include federally recognized Indian tribes, tribal organizations as defined in 25 U.S.C. 450b, Alaska-Native-controlled organizations, Native-Hawaiian-controlled organizations, and Indian-controlled organizations as defined at 20 CFR 684.130.

- During the summer of 2021, the Employment and Training Administration (ETA) held a series of stakeholder listening sessions on grant equity to establish a baseline understanding of potential barriers to greater equity in the mix of grant applicants, peer reviewers, awardees, and communities served. This information will inform future grant-making decisions.

8.2 Did the agency use evidence of effectiveness to allocate funds in its five largest competitive grant programs (e.g., were evidence-based interventions/practices required or suggested and was evidence a significant requirement)?

- YouthBuild applicants are awarded points based on past performance; these metrics are viewed as important to demonstrating successful career outcomes for youth. As a pre-apprenticeship program that prepares young people for the construction industry and other in-demand industries, YouthBuild supports the evidence-based national strategy of apprenticeship.

- Other competitive grant programs that score applications for past performance and use of evidence-informed strategies are the Senior Community Service Employment Program and the National Farmworker Jobs Program.

8.3 Did the agency use its five largest competitive grant programs to build evidence (e.g., requiring grantees to participate in evaluations)?

- All five of DOL’s largest grant programs may be involved in evaluations designed by the Chief Evaluation Office and the relevant DOL agencies. In each case DOL requires or encourages (through language in the funding announcement and proposal review criteria) grantees to use evidence-based models or strategies in grant interventions and/or to participate in an evaluation, especially to test new interventions that theory or research suggest are promising.

- The department recently launched a multi-year implementation study of the Senior Community Service Employment Program and other workforce programs for older workers to build the evidence base on these programs and identify future research options. The contract includes options for more rigorous evaluations, as appropriate.

8.4 Did the agency use evidence of effectiveness to allocate funds in any other competitive grant program (besides its five largest grant programs)?

- When allocating funds, the Department of Labor requires both demonstrated effectiveness and a commitment to building new evidence. The two are of equal importance because many DOL-funded program areas lack a body of evidence sufficient to fund only interventions that are already evidence-based. For example, among recent ETA competitive grant programs, this has involved requiring: (1) demonstration that an approach is evidence-based or promising, either to receive funds (e.g., the Reentry Employment Opportunities Funding Opportunity Announcement) or to become eligible for additional funds (e.g., TechHire); (2) an independent third-party local or grantee evaluation, with priority incentives for rigorous designs (e.g., tiered funding, scoring priorities, and bonus scoring for evidence-based interventions or multi-site rigorous tests); or (3) full participation in an evaluation as well as rigorous grantee (or local) evaluations (e.g., American Apprenticeship Initiative, America’s Promise Job-Driven Grant Program, and the Strengthening Community College Training Grants). Additionally, applicants for competitive funding opportunities from the Bureau of International Labor Affairs (ILAB) are required to conduct and/or participate in evaluations as a condition of award. The department is also evaluating the Pathway Home grant program, building on what was learned from the Linking Employment Activities Pre-release (LEAP) program evaluation. This evaluation will build knowledge about the grant models and will include a feasibility and design options paper for implementation and impact evaluations.

8.5 What are the agency’s one or two strongest examples of how competitive grant recipients achieved better outcomes and/or built knowledge of what works or what does not?

- In 2015, DOL funded an evaluation of the 36-month LEAP Program, including an implementation study of LEAP pilot programs that provided jail-based American Job Centers (AJCs) to individuals preparing to reenter society after time in jail. The evaluation, published in 2018 (see the Final Report and Issue Brief Compendium), identified many promising practices for offering both pre- and post-release services. Since 2020, DOL has funded Pathway Home Pilot Projects and an accompanying evaluation that build on lessons learned from LEAP by providing workforce services to incarcerated individuals before and after release. For example, the Pathway Home requirement that participants keep the same caseworker pre- and post-release reflects a promising practice identified in the LEAP Implementation Study.

- The department funded a national evaluation of the Trade Adjustment Assistance Community College and Career Training grant program, a $1,900,000,000 initiative consisting of four rounds of grants from 2011 to 2018. The grants were awarded to institutions of higher education (mainly community colleges) to build their capacity to provide workforce education and training programs. The implementation study assessed grantees’ implementation of strategies to better connect and integrate education and workforce systems, address employer needs, and transform training programs and services for adult learners. A synthesis identified key implementation and impact findings based on a review of evaluation reports completed by grantees’ third-party evaluators. The outcomes study examined the training, employment, earnings, and self-sufficiency outcomes of nearly 2,800 participants from nine Round 4 grants. Findings from these studies provide evidence-based practices and insights that are being applied to the three rounds of Strengthening Community College Training Grants Funding Opportunity Announcements in 2021 and 2022, as well as to future DOL investments.

8.6 Did the agency provide guidance that makes clear that city, county, and state government, and/or other grantees can or should use the funds they receive from these programs to conduct program evaluations and/or to strengthen their evaluation capacity-building efforts?

- The Department of Labor has a formal Evaluation Policy. Guidance on using funds to conduct and/or participate in program evaluations and/or to strengthen evaluation capacity-building efforts can be found in each grant funding opportunity; this use of funds is a condition of many grants. The Special Program Requirements section of each funding opportunity notifies grantees of this responsibility. Generally, this section states that “As a condition of grant award, grantees are required to participate in an evaluation” if one is undertaken by DOL. The evaluation may include an implementation assessment across grantees, an impact and/or outcomes analysis of all or selected sites within or across grantees, and a benefit/cost analysis or assessment of return on investment. An impact analysis could involve random assignment [i.e., randomly assigning eligible participants either to a treatment group that receives program services or enhanced program services or to control group(s) that receive no program services or program services that are not enhanced].

- The department may require applicants to collect data elements to aid the evaluation. As a condition of award, grantees must agree to (1) make records on participants, employers, and funding available to the evaluation contractor; (2) provide access to program operating personnel, participants, operational and financial records, and any other pertinent documents needed to calculate program costs and benefits; (3) in the case of an impact analysis, facilitate the assignment of participants to program services by lottery (including possibly increasing recruitment of potential participants); and (4) follow evaluation procedures specified by the evaluation contractor under DOL’s direction, including after the period of operation. After award, grantees will receive detailed guidance on ETA’s evaluation methodology, including data collection requirements, as well as technical assistance to support their participation in these activities.

Score

7


Administration for Children and Families (HHS)

8.1 What were the agency’s five largest competitive programs and their appropriations amount (and were city, county, and/or state governments eligible to receive funds from these programs)?

- In FY22, the five largest competitive grant programs are:

- Head Start: $11,000,000,000; eligible applicants: public or private nonprofit organizations, including community-based and faith-based organizations, or for-profit agencies;

- Unaccompanied Children Services: $8,000,000,000; eligible applicants: private nonprofit and for-profit agencies;

- Refugee Support Services: $307,000,000; eligible applicants: states (via formula), private nonprofit, and for-profit agencies;

- Preschool Development Grants: $290,000,000; eligible applicants: states;

- Healthy Marriage Promotion and Responsible Fatherhood Grants: $149,000,000; eligible applicants: states, local governments, tribal entities, and community-based organizations, both for-profit and nonprofit, including faith-based organizations.

8.2 Did the agency use evidence of effectiveness to allocate funds in its five largest competitive grant programs (e.g., were evidence-based interventions/practices required or suggested and was evidence a significant requirement)?

- The Head Start Designation Renewal System (DRS) determines whether Head Start/Early Head Start grantees are delivering high-quality comprehensive services to the children and families they serve. These determinations are based on seven conditions, one of which looks at how classrooms within a program perform on the Classroom Assessment Scoring System (CLASS), an observation-based measure of the quality of teacher-child interactions. Grantees that the DRS deems to be underperforming are denied automatic renewal of their grant and must apply for renewed funding through a standard open competition. Under the most recent funding announcement, grantees re-competing for Head Start funds must describe any violations, such as deficiencies, areas of noncompliance, and/or audit findings, in their record of past performance (p. 28). Applicants may also describe the actions they have taken to address these violations. According to Head Start policy, in competitions to replace or potentially replace a current grantee, the responsible HHS official will give priority to applicants that have demonstrated capacity to provide effective, comprehensive, and well-coordinated early childhood education and development services and programs.

8.3 Did the agency use its five largest competitive grant programs to build evidence (e.g., requiring grantees to participate in evaluations)?

- The Administration for Children and Families’ template (see p. 14 in Attachment C) for competitive grant announcements includes standard language that funding opportunity announcement drafters may select to require grantees to either (1) collect performance management data that contribute to continuous quality improvement and are tied to the project’s logic model or (2) conduct a rigorous evaluation for which applicants must propose an appropriate design specifying research questions, measurement, and analysis.

- As a condition of award, Head Start grantees are required to participate fully in ACF-sponsored evaluations if selected to do so. As such, ACF has an ongoing research portfolio that is building evidence in Head Start. Research sponsored through Head Start funding over the past decade has provided valuable information not only to guide program improvement in Head Start itself, but also to guide the field of early childhood programming and early childhood development. Dozens of Head Start programs have collaborated with researchers in making significant contributions in terms of program innovation and evaluation, as well as the use of systematic data collection, analysis, and interpretation in program operations.

- The Administration for Children and Families’ 2020 HMRF Grants established required evidence activities by scope of grantee services. For example, applicants proposing large-scope services (requesting funding between $1,000,000 and $1,500,000) “must propose a rigorous impact evaluation (i.e., randomized controlled trial (RCT) or high-quality, quasi-experimental design (QED) study) . . . and must allocate at least 15%, but no more than 20%, of their total annual funding for evaluation”. Regardless of their scope of services, all 2020 HMRF grantees must plan for and carry out continuous quality improvement activities and conduct a local evaluation or participate in a federally led evaluation or research effort. ACF has an ongoing research portfolio building evidence related to Strengthening Families, Healthy Marriage, and Responsible Fatherhood, and has conducted randomized controlled trials with grantees in each funding round of these grants.

- ACF reviewed performance data from the 2015 cohort of HMRF grantees (using the nFORM system) to set priorities, interests, and expectations for HMRF grants that were awarded in 2020. For example, because nFORM data indicated that organizations were more likely to meet enrollment targets and engage participants when they focused on implementing one program model, ACF’s 2020 funding opportunity announcement, which led to 113 HMRF grant awards in September 2020, mentioned specific interest in grantee projects “that implement only one specific program model designed for one specific youth service population.”

- In its award decisions, ACF gave “preference to those applicants that were awarded a Healthy Marriage or Responsible Fatherhood grant between 2015 and 2019, and that (a) [were] confirmed by ACF to have met all qualification requirements under Section IV.2, The Project Description, Approach, Organizational Capacity of this FOA; and (b) [were] confirmed by ACF to have received an acceptable rating on their semi-annual grant monitoring statements during years three and four of the project period. [ACF gave] particular consideration to applicants that: (1) designed and successfully implemented, through to end of 2019, an impact evaluation of their program model, and that the impact evaluation was a fair impact test of their program model and that was not terminated prior to analysis; or (2) successfully participated in a federally-led impact evaluation”.

- The Administration for Children and Families also evaluated HMRF grant applicants on their capacity to conduct a local impact evaluation and their proposed approach (for applicants required or electing to conduct local evaluations); their ability to provide a reasonable rationale and/or research base for the proposed program model(s) and curriculum(a); and their inclusion of a continuous quality improvement plan that clearly describes the organization’s commitment to data-driven approaches to identifying where program performance can improve, testing potential improvements, and cultivating a culture and environment of learning and improvement, among other things. Further, the compliance and performance reviews (CAPstone) entail a thorough review of each grantee’s performance. The Office of Family Assistance (OFA) sends a formal set of questions about grantee performance that grant program specialists and technical assistance providers answer ahead of time. OFA, the Office of Planning, Research, and Evaluation (OPRE), and the technical assistance provider then meet to discuss each grantee’s performance at length, using nFORM data and the answers to these formal questions.

- The 2003 Reauthorization of the Runaway and Homeless Youth Act called for a study of long-term outcomes for youth who are served through the Transitional Living Program. In response, ACF is sponsoring a study that will capture data from youth at program entry and at intermediate and longer-term follow-up points after program exit and will assess outcomes related to housing, education, and employment. Additionally, ACF is sponsoring a process evaluation of the 2016 Transitional Living Program Special Population Demonstration Project.

- The Administration for Children and Families manages the Runaway and Homeless Youth Training and Technical Assistance Center (RHYTTAC), the national entity that provides resources and direct assistance to the RHY grantees and other youth serving organizations eligible to receive RHY funds. This training and technical assistance center disseminates information about and supports grantee implementation of high-quality, evidence-informed, and evidence-based practices. In the most recent RHYTTAC grant award, applicants were evaluated based on their strategy for tracking RHY grantee uptake and implementation of evidence-based or evidence-informed strategies. Additionally, as described in the FY22 Transitional Living Program funding opportunity announcement, successful applicants must train all staff and volunteers on evidence-informed practices and provide case management services that include the development of service and treatment plans employing evidence-informed strategies.

- The Administration for Children and Families also evaluates Unaccompanied Children Services, Preschool Development Grants, and RHY grant applicants based on their proposed program performance evaluation plans, how their data will contribute to continuous quality improvement, and their demonstrated experience with comparable program evaluation, among other factors.

8.4 Did the agency use evidence of effectiveness to allocate funds in any other competitive grant programs (besides its five largest grant programs)?

- ACF’s Personal Responsibility Education Program includes three individual discretionary grant programs that fund programs exhibiting evidence of effectiveness, innovative adaptations of evidence-based programs, and promising practices that teach youth about abstinence and contraception to prevent pregnancy and sexually transmitted infections.

- To receive funding through ACF’s Sexual Risk Avoidance Education program, applicants must cite evidence published in a peer-reviewed journal and/or a randomized controlled trial or quasi-experimental design to support their chosen interventions or models.

8.5 What are the agency’s 1-2 strongest examples of how competitive grant recipients achieved better outcomes and/or built knowledge of what works or what does not?

- As mentioned above, ACF is conducting a multipronged evaluation of the Health Profession Opportunity Grants (HPOG) Program. Findings from the first cohort of HPOG grants influenced the funding opportunity announcement for the second round of funding (HPOG 2.0). ACF used findings from the impact evaluation of the first cohort to provide insights to the field about which HPOG program components are associated with stronger participant outcomes. For example, based on the finding that many participants engaged in short-term training for low-wage, entry-level jobs, the HPOG 2.0 funding opportunity announcement more carefully defined the career pathways framework, described specific strategies for helping participants progress along a career pathway, and identified and defined key HPOG education and training components. Applicants were required to describe more clearly how their programs would support career pathways for participants. Based on an analysis indicating limited collaboration with health care employers, the HPOG 2.0 announcement required applicants to demonstrate the use of labor market information, consult with local employers, and describe their plans for employer engagement. It also placed more emphasis on providing basic skills education and assessment of barriers to make the programs accessible to clients who were most prepared to benefit, based on the finding that many programs were screening out applicants with low levels of basic literacy, reading, and numeracy skills.

- The Administration for Children and Families’ Personal Responsibility Education Innovative Strategies Program grantees must conduct independent evaluations of their innovative strategies for the prevention of teen pregnancy, births, and sexually transmitted infections, supported by ACF training and technical assistance. These rigorous evaluations are designed to meet the HHS Teen Pregnancy Prevention Evidence-Based Standards and are expected to generate lessons learned so that others can benefit from these strategies and innovative approaches.

- In 2019, ACF awarded two child welfare discretionary grants to build knowledge of what works. Regional Partnership Grants to Increase the Well-Being of, and to Improve the Permanency Outcomes for, Children and Families Affected By Opioids and Other Substance Abuse aim to build evidence on the effectiveness of targeted approaches that improve outcomes for children and families affected by opioids and other substance use disorders. To this end, grantees will evaluate their local program; select and report on performance indicators that align with proposed program strategies and activities; and participate in a national cross-site evaluation that will describe outcomes for children, adults, and families enrolled in regional partnership grant projects as well as the outcomes of the partnerships. Grants for Community Collaboratives to Strengthen and Preserve Families will support the development, implementation, and evaluation of primary prevention strategies to improve the safety, stability, and well-being of all families through a continuum of community-based services and supports. Projects will include both process and outcome evaluations.

8.6 Did the agency provide guidance that makes clear that city, county, and state government, and/or other grantees can or should use the funds they receive from these programs to conduct program evaluations and/or to strengthen their evaluation capacity building efforts?

- The template for ACF’s competitive grant announcements includes standard language instructing grantees to conduct evaluation efforts. Program offices may use this template to require grantees to collect performance data or conduct a rigorous evaluation. Applicants are instructed to include third-party evaluation contracts in their proposed budget justifications.

- ACF’s 2020 HMRF grants established required evidence activities by scope of grantee services. For example, applicants proposing large-scope services (requesting funding between $1,000,000 and $1,500,000) “must propose a rigorous impact evaluation (i.e., randomized controlled trial (RCT) or high-quality, quasi-experimental design (QED) study) . . . and must allocate at least 15%, but no more than 20%, of their total annual funding for evaluation”. Regardless of their scope of services, all 2020 HMRF grantees must plan for and carry out continuous quality improvement activities and conduct a local evaluation or participate in a federally led evaluation or research effort.

- ACF’s 2018 Preschool Development Grants funding announcement notes that “it is intended that States or territories will use a percentage of the total amount of their [renewal] grant award during years two through four to conduct the proposed process, cost, and outcome evaluations, and to implement a data collection system that will allow them to collect, house, and use data on the populations served, the implementation of services, the cost of providing services, and coordination across service partners.”

- ACF’s rules (section 1351.15) allow RHY grant awards to be used for data collection and analysis.

- Regional Partnership Grants (RPG) require a minimum of 20% of grant funds to be spent on evaluation elements. To support the evaluation capacity of RPG grantees, ACF has provided technical assistance for data collection, performance measurement, and continuous quality improvement; implementation of the cross-site evaluation; and knowledge dissemination. It has also provided group technical assistance via webinars and presentations.

- Community Collaboratives to Strengthen and Preserve Families (CCSPF) grants (p. 7) require a minimum of 10% of grant funds to be used for data collection and evaluation activities. ACF has supported the evaluation capacity of CCSPF grantees by providing technical assistance for developing research questions, methodologies, and process and outcome measures; implementing grantee-designed evaluations and continuous quality improvement activities; analyzing evaluation data; disseminating findings; and supporting data use in project and organizational decision-making processes.

- ACF also provides evaluation technical assistance to grantees:

- to support grantees participating in federal evaluations (e.g., projects supporting grantees from Health Profession Opportunity Grants 2.0 and Tribal Health Profession Opportunity Grants 2.0); and

- to support grantees conducting their own local evaluations (e.g., projects supporting Healthy Marriage and Responsible Fatherhood grantees, PREP grantees, and YARH grantees).

Score

8

Substance Abuse and Mental Health Services Administration

8.1 What were the agency’s five largest competitive programs and their appropriations amount (and were city, county, and/or state governments eligible to receive funds from these programs)?

- SAMHSA’s five largest competitive grant programs in FY22 are listed below. Each program’s notice of funding opportunity outlines its eligibility criteria. For these five programs, the following entities are eligible: states, U.S. territories, tribal entities, state departments of education, counties, cities and towns, community behavioral health clinics, and community-based behavioral health clinics.

- State Opioid Response (SOR) Grant: $1,500,000,000;

- Certified Community Behavioral Health Clinic: $287,000,000;

- Strategic Prevention Framework (SPF): $127,000,000;

- Children’s Mental Health Services: $125,000,000;

- Project AWARE (Advancing Wellness and Resiliency in Education): $120,000,000.

8.2 Did the agency use evidence of effectiveness to allocate funds in its five largest competitive grant programs (e.g., were evidence-based interventions/practices required or suggested and was evidence a significant requirement)?

- In FY22, SAMHSA used the conclusions and recommendations from its 2021 Evaluation Report, Combating Opioid Misuse: Findings from the Evaluation Report of the Strategic Prevention Framework—Prescription Drug (SPF-Rx), to inform the next round of competitive discretionary grants. The report was based on a cross-site evaluation in which all grantees participated. The evaluation provided evidence of increased access to and professional use of prescription drug monitoring program data, as well as evidence that the grant program resulted in new community prevention coalitions and partnerships and was associated with reductions in some of the negative consequences of prescription opioid misuse.

- SAMHSA grants are intended to fund services or practices that have a demonstrated evidence base and that are appropriate to the population(s) of focus. As with all SAMHSA grants, the five largest competitive grant programs require applicants to include evidence-based practices (EBPs) and activities that are backed by science. Funds are allocated based on an application that includes a request for evidence of effective work in reducing substance use and mental health disorders. Two competitive grant programs with mechanisms that allocate funds toward evidence-based practices are:

- Certified Community Behavioral Health Clinic Expansion Grants require applicants to describe their proposed evidence-based service or practice. The grantee must describe how the EBP meets the needs of the population(s) of focus and the outcomes to be achieved. Grantees must also indicate how their practice might be modified and the reasons for such modifications.

- Project AWARE requires grantees to identify the EBPs they will implement in the required number (three) of local education agencies/communities. They must also describe how the selected EBPs are effective and appropriate for school-aged youth.

8.3 Did the agency use its five largest competitive grant programs to build evidence (e.g., requiring grantees to participate in evaluations)?

- SAMHSA’s draft evaluation plan includes evaluations of some of its largest competitive grant programs. These evaluations will help SAMHSA build evidence. One mechanism for this is the grantmaking process: in some grants, SAMHSA includes additional terms and conditions stating that, depending on the funding opportunity and grant application, a grantee may be asked to participate in a cross-site evaluation.

- All grant programs at SAMHSA are required to submit data on race, ethnicity, gender, and sexual orientation (among other demographic data). In addition, SAMHSA’s surveys collect national data in these areas, allowing SAMHSA’s Office of Behavioral Health Equity to utilize federal and community data to identify, monitor, and respond to behavioral health disparities.

8.4 Did the agency use evidence of effectiveness to allocate funds in any other competitive grant programs (besides its five largest grant programs)?

- Nearly all of the largest grant programs in FY21 were again at the top of the list for funding in FY22 (Project AWARE is in the top five for FY22). Specification of evidence-based practices, along with discussion of how each chosen EBP is appropriate for the population(s) of focus and the outcomes identified, typically makes up a substantial proportion of the evaluative criteria for grant applications [e.g., 25% for Project AWARE (see Section C)]. Grants other than the largest also require proposed programs to use EBPs, and this requirement is enforced through substantial point values assigned during objective peer review (e.g., Infant and Early Childhood Mental Health Program). All grantees are required to submit National Outcome Measurement Systems (NOMs) data as well as narrative final reports outlining successes, challenges, and innovations. These reports and quantitative data are reviewed by dedicated government project officers (GPOs) and program leadership, and grantees are provided technical assistance and guidance on how to improve their work, when applicable.

- In addition, SAMHSA grantees are required to submit data on race, ethnicity, gender, and sexual orientation (among other demographic data) as well as data on social determinants of health (such as access to stable housing, employment, and education status). SAMHSA’s surveys collect national data in these areas, allowing its Office of Behavioral Health Equity to use federal and community data to identify, monitor, and respond to behavioral health disparities.

8.5 What are the agency’s one or two strongest examples of how competitive grant recipients achieved better outcomes and/or built knowledge of what works or what does not?

- Competitive grant applicants are required to consider EBPs in their applications and are referred to SAMHSA’s Evidence-Based Practices Resource Center (EBPRC) for the tools they need to achieve better outcomes based on what works. Another example is SAMHSA’s trauma and justice portfolio, which provided a comprehensive public health approach to addressing trauma and establishing a trauma-informed approach in health, behavioral health, human services, and related systems. The intent of this initiative was to reduce both the observable and less visible harmful effects of trauma and violence on children and youth, adults, families, and communities. As part of this initiative, the SPARS team presented the video series A Trauma-Informed Approach to Data Collection, with commentary from subject matter experts and clients of the People Encouraging People program in Baltimore, MD. This series advised grantees and GPOs on using a trauma-informed approach to collecting client-level data.

- Another example is the set of EBPs that SOR grantees have implemented, focused on safe prescribing of naloxone and on medication for opioid use disorder, which help build knowledge around the use of these EBPs. In FY22, State Opioid Response grantees are also using funds for fentanyl test strips. This recent change under the Biden-Harris Administration will help build knowledge of the utility of these EBPs.

8.6 Did the agency provide guidance that makes clear that city, county, and state government, and/or other grantees can or should use the funds they receive from these programs to conduct program evaluations and/or to strengthen their evaluation capacity-building efforts?

- Grantees are encouraged to allocate grant funds for data collection, data analysis, and program evaluation. Some grantees use grant funds to hire external evaluators to assist them in the evaluation process. For example, one funding announcement requires the applicant to “provide specific information about how you will collect the required data for this program and how the data will be utilized to manage, monitor and enhance the program.” In addition, up to 20% of the total grant award for the budget period may be used for data collection, performance measurement, and performance assessment expenses.

Score

10

U.S. Dept. of Housing & Urban Development

8.1 What were the agency’s five largest competitive programs and their appropriations amount (and were city, county, and/or state governments eligible to receive funds from these programs)?

- In FY22, HUD’s five largest competitive grant programs are:

- Continuum of Care: $3,300,000,000; eligible grantees: state and local governments and coalitions;

- Lead Hazard Reduction: $520,800,000; eligible grantees: local governments;

- Choice Neighborhoods (Planning and Implementation): $350,000,000; eligible grantees: state and local governments;

- Native American Housing Block Grant-Competitive Grant Program: $150,000,000; eligible grantees: Native American tribal governments and tribal organizations;

- Resident Opportunity and Self-Sufficiency Service Coordinator Program: $35,000,000; eligible grantees: Native American tribal governments and tribal organizations, public housing agencies (PHAs)/Indian housing authorities, nonprofit organizations, and resident associations.

8.2 Did the agency use evidence of effectiveness to allocate funds in its five largest competitive grant programs? (e.g., Were evidence-based interventions/practices required or suggested? Was evidence a significant requirement?)

- Three of HUD’s five largest competitive grant programs allocated funds to evidence-based practices and programs:

- The Continuum of Care Program (CoC) provides up to 10 points to grantees that propose programs with Housing First approaches, an evidence-based practice. The application states that CoCs must demonstrate that at least 75% of all project applications submitted under this Notice of Funding Opportunity that include housing activities (e.g., permanent housing, safe haven) use the Housing First approach, meaning low-barrier projects that have no service participation requirements or preconditions to entry and that prioritize rapid placement and stabilization in permanent housing. In addition, CoCs must describe the tools and methods they use to regularly evaluate whether projects that commit to a Housing First approach maintain fidelity to it during implementation; this must include a process for reviewing fidelity outside of the local CoC competition rating and ranking process. To promote equity, HUD (1) requires CoC applicants to explicitly outline how marginalized communities will be engaged in the planning process, (2) encourages project managers to hire individuals with lived experience of homelessness, and (3) pushes applicants for capital investment projects to commit to and demonstrate plans to hire low-income individuals.

- The Lead Hazard Reduction Grant Program is designed to maximize the number of children under the age of six protected from lead poisoning by strategically targeting lead reduction efforts to neighborhoods where children are at greatest risk. The FY22 grants require grantees to use evidence-based lead hazard control methods; meet cost savings, effectiveness, and grant compliance benchmarks; and gather pre- and post-treatment data to support and validate their investments. The application assigns 40 points (out of 102) based on grantees’ organizational capacity and relevant experience. Past research showing large returns on investment supported HUD’s decision to request a 31% increase in program funding for FY22.

- Choice Neighborhoods Implementation Grants use a point system to determine award recipients. Of the 105 total points possible, 21 points are available for criteria demonstrating previous performance, and 15 points are awarded for soundness of approach.

8.3 Did the agency use its five largest competitive grant programs to build evidence? (e.g., requiring grantees to participate in evaluations)

- As a condition of funding, all HUD competitive grantees are required to cooperate in any HUD-sponsored research or evaluation studies.

- The Continuum of Care Program is supported by the National Homeless Data Analysis Project, which provides communities with resources to improve data collection and consistent reporting about individuals experiencing homelessness to support national Annual Homeless Assessment Reports.