2022 Federal Index

AmeriCorps

9

Leadership

Did the agency have senior staff members with the authority, staff, and budget to build and use evidence to inform the agency’s major policy and program decisions in FY22?

1.1 Did the agency have a senior leader with the budget and staff to serve as the agency’s evaluation officer or equivalent (example: Evidence Act 313)?

- The director of the Office of Research and Evaluation (ORE) serves as the AmeriCorps evaluation officer and oversees ORE’s FY22 $4,120,000 budget and a staff of 15. On average, the agency has invested between $1,000,000 and $1,500,000 in the ORE staff over the past 10 years. More information about ORE can be found here.

1.2 Did the agency have a senior leader with the budget and staff to serve as the agency’s chief data officer or equivalent [example: Evidence Act 202(e)]?

- AmeriCorps hired its first chief data officer in FY21. The chief data officer began addressing long-standing data asset management priorities in FY22 including building the agency’s data analytics capacity as well as developing a process/structure to ensure coordination and collaboration across data integrity/management, data for performance and data for research and evaluation.

1.3 Did the agency have a governance structure to coordinate the activities of its evaluation officer, chief data officer, statistical officer, performance improvement officer, and other related officials in order to support Evidence Act implementation and improve the agency’s major programs?

- AmeriCorps has a Research & Evaluation Council that meets monthly to assess progress in implementing the agency’s learning agenda and evaluation plan. Members of the council include the director of ORE, the chief information officer/chief data officer, and the chief of staff, as well as the chief of program operations and the chief operating officer.

9

Evaluation & Research

Did the agency have an evaluation policy, evaluation plan, and learning agenda (evidence building plan), and did it publicly release the findings of all completed program evaluations in FY22?

2.1 Did the agency have an agency-wide evaluation policy [example: Evidence Act 313(d)]?

- AmeriCorps has an evaluation policy that presents five key principles that govern the agency’s planning, conduct, and use of program evaluations: rigor, relevance, transparency, independence, and ethics.

2.2 Did the agency have an agency-wide evaluation plan [example: Evidence Act 312(b)]?

- AmeriCorps developed and implemented its FY22-26 strategic plan as well as its learning agenda, which describes anticipated evidence generated from ongoing and planned evaluations.

2.3 Did the agency have a learning agenda (evidence building plan) and did the learning agenda describe the agency’s process for engaging stakeholders, including but not limited to the general public, state and local governments, and researchers/academics, in the development of that agenda (example: Evidence Act 312)?

- AmeriCorps uses the terms learning agenda, evaluation plan, and strategic evidence building plan synonymously. AmeriCorps has an evergreen learning agenda. The plan was updated and approved by the U.S. Office of Management and Budget (OMB) in FY22. As part of its stakeholder engagement process for the strategic plan, AmeriCorps invited stakeholders to provide input on the learning agenda. Listening sessions included the learning agenda. AmeriCorps State and National also invited state commissions to share feedback through various calls. Internal stakeholders (e.g., staff working with grantees) may provide feedback on the learning agenda using a link on the Office of Research and Evaluation’s SharePoint home page.

2.4 Did the agency publicly release all completed program evaluations?

- All completed evaluation reports are posted to the Evidence Exchange, an electronic repository for evaluation studies and other reports. This virtual repository was launched in September 2015.

2.5 Did the agency conduct an Evidence Capacity Assessment that addressed the coverage, quality, methods, effectiveness, and independence of the agency’s evaluation, research, and analysis efforts [example: Evidence Act 315, subchapter II (c)(3)(9)]?

- A comprehensive portfolio of research projects has been built to assess the extent to which AmeriCorps is achieving its mission. As findings emerge, future studies are designed to continuously build the agency’s evidence base. The Office of Research & Evaluation relies on scholarship in relevant fields of academic study; a variety of research and program evaluation approaches including field, experimental, and survey research; multiple data sources including internal and external administrative data; and different statistical analytic methods. AmeriCorps relies on partnerships with universities and third party research firms to ensure independence and access to state-of-the-art methodologies. It supports its grantees with evaluation technical assistance and courses to ensure that their evaluations are of the highest quality, and it requires grantees receiving $500,000 or more in annual funding to engage an external evaluator. These efforts have resulted in a robust body of evidence that allows: (1) national service participants to experience positive benefits, (2) nonprofit organizations to be strengthened, and (3) national service programs to effectively address local issues (along with a suite of AmeriCorps resources for evaluations).

- While AmeriCorps is a non-CFO agency and is therefore not required to comply with the Evidence Act, including the mandated Evidence Capacity Assessment, it procured a third party to support analysis of its workforce capacity, including its evaluation, research, statistical, and analysis workforce capacity. Findings from this workforce analysis will be submitted to the agency in FY22 and used for continuous improvement.

2.6 Did the agency use rigorous evaluation methods, including random assignment studies, for research and evaluation purposes?

- AmeriCorps uses the research design most appropriate for addressing the research question. When experimental or quasi-experimental designs are warranted, the agency uses them and encourages its grantees to use them, as noted in the agency evaluation policy: “AmeriCorps is committed to using the most rigorous methods that are appropriate to the evaluation questions and feasible within statutory, budget and other constraints.” As of August 2022, AmeriCorps has received 47 grantee evaluation reports that use experimental design and 144 that use quasi-experimental design. AmeriCorps has also funded a mixed-methods longitudinal study of National Civilian Community Corps (NCCC) members that includes a matched comparison group. This member development study will conclude in FY23.

10

Resources

Did the agency invest at least 1% of program funds in evaluations in FY22 (examples: Impact studies; implementation studies; rapid cycle evaluations; evaluation technical assistance, rigorous evaluations, including random assignments)?

3.1 ____ invested $____ on evaluations, evaluation technical assistance, and evaluation capacity-building, representing __% of the agency’s $______ million FY22 budget.

- AmeriCorps invested $9,420,000 on evaluations, evaluation technical assistance, and evaluation capacity-building, representing 1.1% of the agency’s $865,409,000 FY22 operating budget.
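The percentage reported in 3.1 can be reproduced from the two dollar figures given there. The snippet below is a minimal sketch of that arithmetic; the variable names are illustrative, and the 1% benchmark is taken from the criterion question above.

```python
# Figures quoted in item 3.1 of this report.
evaluation_investment = 9_420_000   # dollars invested in evaluation activities
operating_budget = 865_409_000      # FY22 operating budget in dollars
THRESHOLD_PCT = 1.0                 # "at least 1%" benchmark from the criterion question

# Share of the operating budget devoted to evaluation, as a percentage.
share_pct = 100 * evaluation_investment / operating_budget
meets_threshold = share_pct >= THRESHOLD_PCT

print(f"Evaluation share: {share_pct:.1f}% (meets 1% benchmark: {meets_threshold})")
```

Rounded to one decimal place, the share matches the 1.1% stated in the report.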

3.2 Did the agency have a budget for evaluation and how much was it? (Were there any changes in this budget from the previous fiscal year?)

- In FY22, Congress allocated $4,120,000 to AmeriCorps for its evaluation budget. This is an increase of $120,000 compared to the agency’s FY21 allocation.
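The FY21 allocation is not stated directly, but it follows from the two figures above. A minimal sketch of the derivation, with illustrative variable names:

```python
# Figures quoted in item 3.2 of this report.
fy22_allocation = 4_120_000   # FY22 evaluation budget in dollars
increase = 120_000            # stated increase over FY21 in dollars

# The FY21 figure is implied by the two numbers above; it is not quoted in the report.
implied_fy21 = fy22_allocation - increase
pct_increase = 100 * increase / implied_fy21

print(f"Implied FY21 allocation: ${implied_fy21:,}")
print(f"Year-over-year increase: {pct_increase:.1f}%")
```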

3.3 Did the agency provide financial and other resources to help city, county, and state governments or other grantees build their evaluation capacity (including technical assistance funds for data and evidence capacity building)?

- The Office of Research & Evaluation funds a contractor to provide AmeriCorps grantees with evaluation capacity building support ($918,169 of the $4,120,000 evaluation budget). Staff from this office are also available to state commissions to address their evaluation questions and make resources (e.g., research briefs summarizing effective interventions, online evaluation planning, and reporting curricula) available to them and the general public. AmeriCorps awards investment fund grants to state commissions ($8,500,000 in FY22, of which approximately one-third will be used for data and evidence capacity building activities based on prior year activities).
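To put the capacity-building figures in proportion, the sketch below computes the contractor's share of the evaluation budget and a rough dollar estimate for the investment fund grants. It treats "approximately one-third" as exactly one-third, which is an assumption made only for illustration; the report gives no exact dollar figure.

```python
# Figures quoted in item 3.3 of this report.
evaluation_budget = 4_120_000        # FY22 evaluation budget in dollars
capacity_contract = 918_169          # evaluation capacity building contract in dollars
investment_fund_grants = 8_500_000   # FY22 investment fund grants to state commissions

# Assumption: "approximately one-third" is treated as exactly 1/3 for illustration.
ASSUMED_CAPACITY_SHARE = 1 / 3

contract_share_pct = 100 * capacity_contract / evaluation_budget
estimated_capacity_dollars = investment_fund_grants * ASSUMED_CAPACITY_SHARE

print(f"Contract share of evaluation budget: {contract_share_pct:.1f}%")
print(f"Estimated grant dollars for capacity building: ${estimated_capacity_dollars:,.0f}")
```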

7

Performance Management / Continuous Improvement

Did the agency implement a performance management system with outcome-focused goals and aligned program objectives and measures, and did it frequently collect, analyze, and use data and evidence to improve outcomes, return on investment, and other dimensions of performance in FY22?

4.1 Did the agency have a strategic plan with outcome goals, program objectives (if different), outcome measures, and program measures (if different)?

- AmeriCorps’ FY22-26 strategic plan (goals and objectives) was approved by OMB and includes performance indicators. The agency has developed an internal-facing implementation tracker to facilitate the assessment of progress toward its goals and objectives.

4.2 Does the agency use data/evidence to improve outcomes and return on investment?

- The agency continued to invest in targeted return on investment analyses in FY22. An overview of findings from eight completed analyses is publicly available. The FY22 investment in assessing agency performance totaled $438,657.44.

- The agency’s chief risk officer also conducted regular risk assessments to ensure that proper internal controls were in place. Its Risk Management Council continued to meet regularly to review these data captures and adjust processes and practices accordingly to improve organizational outcomes.

4.3 Did the agency have continuous improvement or learning cycle processes to identify promising practices, problem areas, possible causal factors, and opportunities for improvement (examples: stat meetings, data analytics, data visualization tools, or other tools that improve performance)?

- To support AmeriCorps’ continuous improvement cycles, the agency is leveraging two technology tools: an internal-facing tracker for agency priorities and a strategic plan dashboard for agency data needs. These two complementary tools are designed for consistent agency-wide reporting on progress toward goals/objectives.

6

Data

Did the agency collect, analyze, share, and use high-quality administrative and survey data consistent with strong privacy protections to improve (or help other entities improve) outcomes, cost effectiveness, and/or the performance of federal, state, local, and other service provider programs in FY22?

5.1 Did the agency have a strategic data plan, including an open data policy (example: Evidence Act 202(c), Strategic Information Resources Plan)?

- In FY22 the agency’s chief data officer developed and implemented an organizational structure to support the maturation of enterprise data management at AmeriCorps. The data stewardship framework is a formal organizational structure that assigns documented roles and responsibilities for enterprise data to the appropriate individuals within the enterprise. Through these roles and responsibilities, individuals and the organizations to which they belong are empowered as stewards, not owners, of the data assets. In this role, they manage various aspects of the data assets in the best interest of the enterprise. By promoting accountability for data as an enterprise asset and providing for effective collaboration among the necessary stakeholders, data stewardship fosters an environment ensuring optimal performance. AmeriCorps’ data stewardship framework includes three governance tiers: a Strategic Advisory Board, a Data Governance Council, and data practitioners. Each governance tier has a charter that defines its purpose, authority, scope, functions, and membership. These governance tiers execute the agency’s data strategic goals and support the agency’s data management policy (Policy 383, currently under revision).

- The Data Governance Council is supported by collaborative working groups organized around a major category of enterprise data or a capability of data management. Three collaborative working groups were created in FY22 to support management of the agency’s diversity data, the agency’s strategic plan performance measures, and the agency’s grant management technology modernization efforts. (Note that AmeriCorps’ Technology Modernization Fund proposal for $14,000,000 was approved by the Federal Technology Modernization Board in FY22.)

5.2 Did the agency have an updated comprehensive data inventory (example: Evidence Act 3511)?

- The agency’s Information Technology Data Management Policy addresses the need to have a current and comprehensive data inventory. The agency has an open data platform. The chief data officer procured updated modules and technical support for this platform in FY22 to better support and advance the agency’s data management capacities.

5.3 Did the agency promote data access or data linkage for evaluation, evidence building, or program improvement [examples: model data-sharing or data-licensing agreements; data tagging and documentation; data standardization; downloadable machine-readable, de-identified tagged data; Evidence Act 3520(c)]?

- AmeriCorps has a data request form and an MOU template so that anyone interested in accessing agency data may use the protocol to request data. In addition, public data sets are accessible through the agency’s open data platform. The agency’s member exit survey data were made publicly available for the first time in FY19. In addition, nationally representative civic engagement and volunteering statistics are available on an interactive platform through a data sharing agreement with the U.S. Census Bureau. The goal of these platforms is to make these data more accessible to all interested end users.

- The portfolio navigator pulls data from the AmeriCorps data warehouse for use by the agency’s portfolio managers and senior portfolio managers. The goal is to use this information for grants management and continuous improvement throughout the grant lifecycle.

5.4 Did the agency have policies and procedures to secure data and protect personal confidential information (example: differential privacy; secure, multiparty computation; homomorphic encryption; or developing audit trails)?

- The agency has a privacy policy (Policy 153) that was signed in FY20 and posted internally. The Information Technology Data Governance Policy addresses data security. The agency conducts privacy impact assessments, consisting of a privacy review of each of AmeriCorps’ largest electronic systems, which are then published online.

5.5 Did the agency provide assistance to city, county, and/or state governments, and/or other grantees in accessing the agency’s datasets while protecting privacy?

- AmeriCorps provides assistance to grantees, including governments, to help them access agency data. For example, AmeriCorps provides assistance on using the AmeriCorps Member Exit Survey data to state service commissions (many of which are part of state government) and other grantees as requested, through briefings integrated into standing calls with these entities. ORE worked with a few state commissions in FY22 to develop a prototype report tailored to each state that contains data from the Current Population Survey Civic Engagement and Volunteering (CPS CEV) Supplement and Member Exit Survey. The goal is to create these reports for state commissions to use for catalyzing data-driven national service and volunteering efforts.

7

Common Evidence Standards / What Works Designations

Did the agency use a common evidence framework, guidelines, or standards to inform its research and funding purposes; did that framework prioritize rigorous research and evaluation methods; and did the agency disseminate and promote the use of evidence-based interventions through a user-friendly tool in FY22 (example: What Works Clearinghouses)?

6.1 Did the agency have a common evidence framework for research and evaluation purposes?

- AmeriCorps uses the same standard scientific research methods and designs for all of its studies and evaluations following the model used by clearinghouses like Department of Education’s What Works Clearinghouse, the Department of Labor’s Clearinghouse for Labor Evaluation and Research, and the Department of Health and Human Services’ Home Visiting Evidence of Effectiveness project.

- AmeriCorps’ evidence building approach utilizes the full range of social science designs and methods. Application of these strategies in the context of all AmeriCorps program models does not neatly align with the three-tiered evidence framework as defined and implemented in the context of the Social Innovation Fund and other federal tiered-evidence initiatives.

6.2 Did the agency have a common evidence framework for funding decisions?

- AmeriCorps has a common evidence framework for funding decisions in the Senior Corps and AmeriCorps state and national programs. This framework, which is articulated in the AmeriCorps State and National program notice of funding, includes the following evidence levels: pre-preliminary, preliminary, moderate, and strong.

- The tiered evidence framework is defined by these research designs and has been embedded into the AmeriCorps state and national (ASN) competitive grant making process for several years. For context, in FY22, funding appropriated for ASN programs amounts to 54% of AmeriCorps’ enacted operating budget. Furthermore, 64% of FY22 ASN competitively awarded funds were invested in interventions with moderate and strong evidence. Investment in education interventions (50% of the ASN portfolio) with moderate (14%) or strong (56%) evidence amounted to 70% of allocated funds in this focus area.

6.3 Did the agency have a clearinghouse(s) or a user-friendly tool that disseminated information on rigorously evaluated, evidence-based solutions (programs, interventions, practices, etc.) including information on what works where, for whom, and under what conditions?

- The AmeriCorps Evidence Exchange is a virtual repository of reports and resources intended to help AmeriCorps grantees and other interested stakeholders find information about evidence- and research-based national service programs. Examples of the types of resources available in the Evidence Exchange include research briefs that describe the core components of effective interventions such as those in the areas of education, economic opportunity, and health.

- The Office of Research & Evaluation also creates campaigns and derivative products to distill complex report findings and increase their utility for practitioners (for example, this brief on a study about the health benefits of Senior Corps). It categorizes reports according to their research design so that users can easily search for experimental, quasi-experimental, or nonexperimental studies and studies that qualify for strong, moderate, or preliminary evidence levels.

- The Office of Research & Evaluation awarded a new, multi-year contract to Mathematica in FY22 to support and advance the agency’s knowledge translation and dissemination goals so that multiple stakeholder groups can access the agency’s body of evidence and more effectively address local community needs.

6.4 Did the agency promote the utilization of evidence-based practices in the field to encourage implementation, replication, and application of evaluation findings and other evidence?

- AmeriCorps launched and promoted its web-based interactive tool for supporting the implementation and replication of evidence-based interventions: Scaling Checklists: Assessing Your Level of Evidence and Readiness (SCALER). This resource was promoted by the Department of Education and AmeriCorps through webinars and in-person trainings. The agency makes a range of resources available to the field to encourage the use of evidence-based practices.

- AmeriCorps maintained its agency-wide approach to promoting the use of evidence-based practices and employed a variety of strategies including evidence briefs, broad-based support to national service organizations, and targeted technical assistance to grantees. First, ORE has created campaigns and derivative products to distill complex report findings and increase their utility for practitioners. Second, AmeriCorps has created user-friendly research briefs that describe the core components of effective interventions in the areas of education, economic opportunity, and health. These briefs are designed to help grantees (and potential grantees) adopt evidence-based approaches. Third, ORE funds a contractor to provide AmeriCorps grantees with evaluation capacity building support; staff are also available to state commissions to address their evaluation questions and to make resources (e.g., research briefs summarizing effective interventions, online evaluation planning and reporting curricula) available to them and the general public. Fourth, AmeriCorps funds and participates in grantee conferences that include specific sessions on how to incorporate evidence and data into national service programs. Fifth, as part of its State and National FY20 application process, AmeriCorps provided technical assistance to grantees on using evidence-based practices through webinars and calls. AmeriCorps Seniors continues to encourage and support the use of evidence-based programs, as identified by the U.S. Department of Health and Human Services’s (HHS’s) Administration for Community Living. In FY22 AmeriCorps Seniors began exploring and supporting evidence-based service to work models.

5

Innovation

Did the agency have staff, policies, and processes in place that encouraged innovation to improve the impact of its programs in FY22 (examples: prizes and challenges, behavioral science trials, innovation labs/accelerators, performance partnership pilots, demonstration projects or waivers with rigorous evaluation requirements)?

7.1 Did the agency have staff dedicated to leading innovation efforts to improve the impacts of its programs?

- AmeriCorps program offices have used American Rescue Plan Act (ARPA) funding as an opportunity to be innovative and to hire term-appointed staff to implement these innovation efforts. Examples of these efforts include the following:

- ACS Demonstration Authority: ARPA funds will be used to demonstrate and evaluate service to work models for older Americans.

- ACS Research: ACS and ORE will research COVID impacts on ACS programs, volunteers, and service stations. A third party research firm will be awarded funding in FY22.

- ASN Planning Grants: ARPA funds will be used to demonstrate and evaluate strategies for increasing access to agency resources in underserved communities including increasing member stipends and waiving match requirements as well as democratizing access to evidence (we will create use cases with ASN grantees utilizing the Impact Genome’s standardized evidence database).

- AmeriCorps will invest $20,000,000 in nonprofit organizations that seek to pilot a Volunteer Generation Fund in their communities to help address the nation’s learning loss crisis resulting from the pandemic. This investment will be evaluated for its implementation and early outcomes.

- Public Health AmeriCorps is providing $400,000,000 in Centers for Disease Control and Prevention (CDC) and ARP funding dedicated to building a pipeline of public health workers and strengthening public health systems and the capacity to recover from the pandemic. This initiative will be evaluated (a contract will be awarded to a third party research firm in FY22, and the evaluation design is expected to be quasi-experimental). Findings are expected to inform programmatic improvements in the areas of member and volunteer training/certification, creation of sector-specific career pathways, and the role of cross-agency partnerships in building community capacity to address critical health issues.

- AmeriCorps VISTA Food Security Initiative: VISTA implemented a second cohort of grantees as part of its food security initiative. The model is being used to establish formal learning communities among sponsor organizations and cohorts of VISTA volunteers to assess whether or not these program enhancements will result in better outcomes for volunteers, organizations, and communities served. Staff from ORE are conducting an internal assessment of this model to determine the feasibility of conducting a more formal evaluation of the initiative in FY23.

7.2 Did the agency have initiatives to promote innovation to improve the impact of its programs?

- AmeriCorps continued to learn from its National Service and Civic Engagement research grantees, who receive grant funds to engage community residents and leaders in the development of new and innovative national service projects (more information available here). In addition to national service project development, these grants foster civic engagement through community research teams and build community capacity for using research to identify and understand local issues as well as to identify possible solutions. These grantees were given the opportunity to apply for additional funding in FY22 to build on research that is used to innovate and improve national service and volunteer practice and knowledge.

7.3 Did the agency evaluate its innovation efforts, including using rigorous methods?

- AmeriCorps uses the research design most appropriate for addressing the research question. When experimental or quasi-experimental designs are warranted, the agency uses them and encourages its grantees to use them, as noted in the agency evaluation policy: “AmeriCorps is committed to using the most rigorous methods that are appropriate to the evaluation questions and feasible within statutory, budget and other constraints.” As of August 2022, AmeriCorps has received 47 grantee evaluation reports that use experimental design and 144 that use quasi-experimental design. AmeriCorps has also funded a mixed-methods longitudinal study of NCCC members that includes a matched comparison group. This member development study will conclude in FY23.

13

Use of Evidence in Competitive Grant Programs

Did the agency use evidence of effectiveness when allocating funds from its competitive grant programs in FY22 (examples: tiered-evidence frameworks, evidence-based funding set-asides, priority preference points or other preference scoring for evidence, and pay for success provisions)?

8.1 What were the agency’s five largest competitive programs and their appropriations amount (and were city, county, and/or state governments eligible to receive funds from these programs)?

- In FY22, AmeriCorps’ largest competitive grant programs are the following:

- AmeriCorps state and national program (excluding state formula grant funds): $277,694,600; eligible grantees: nonprofit organizations, state governments, tribal governments, local governments, institutions of higher education.

- Senior Corps RSVP program: $53,956,000; eligible grantees: nonprofit organizations, local governments.

8.2 Did the agency use evidence of effectiveness to allocate funds in its five largest competitive grant programs (e.g., were evidence-based interventions/practices required or suggested and was evidence a significant requirement)?

- AmeriCorps’ State and National grants program (excluding state formula grant funds) allocated as many as 44 out of 100 points to organizations that submitted applications supported by performance and evaluation data in FY22. Specifically, up to 24 points can be assigned to applications with theories of change supported by relevant research literature, program performance data, or program evaluation data; up to 20 points can be assigned for an applicant’s incoming level of evidence and the quality of the evidence. Further, in 2022 AmeriCorps prioritized the funding of specific education, economic opportunity, and health interventions with moderate or strong levels of evidence.

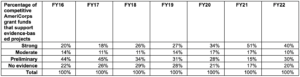
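The point structure described for the State and National grants program (up to 24 points for a supported theory of change plus up to 20 points for level and quality of evidence, out of 100) can be sketched as follows. The `EvidenceScore` class and the example reviewer scores are hypothetical and only illustrate how the two caps combine; they are not AmeriCorps' actual scoring tool.

```python
from dataclasses import dataclass

# Point caps taken from the report; everything else here is illustrative.
MAX_THEORY_OF_CHANGE = 24   # theory of change supported by research or data
MAX_EVIDENCE_LEVEL = 20     # incoming level of evidence and its quality
TOTAL_APPLICATION_POINTS = 100


@dataclass
class EvidenceScore:
    """Hypothetical container for the two evidence-related sub-scores."""
    theory_of_change: int
    evidence_level: int

    def total(self) -> int:
        # Clamp each sub-score to its allowed range before summing.
        theory = min(max(self.theory_of_change, 0), MAX_THEORY_OF_CHANGE)
        evidence = min(max(self.evidence_level, 0), MAX_EVIDENCE_LEVEL)
        return theory + evidence


max_evidence_points = MAX_THEORY_OF_CHANGE + MAX_EVIDENCE_LEVEL

# Made-up reviewer scores, used only to show the calculation.
example = EvidenceScore(theory_of_change=18, evidence_level=12)
print(f"Maximum evidence-related points: {max_evidence_points} of {TOTAL_APPLICATION_POINTS}")
print(f"Example application: {example.total()} evidence-related points")
```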

- Since AmeriCorps’ implementation of a scoring process that assigns specific points for level of evidence, the percentage of grant dollars allocated to strong, moderate, preliminary, and no evidence categories has shifted over time (see table below) such that more grant dollars were awarded to applicants with strong and moderate levels of evidence for proposed interventions, and fewer grant dollars were awarded to applicants with little to no evidence of effectiveness.

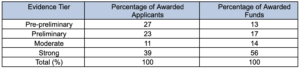

- In the education focus area, exhibit 1 (below) reveals that half (50%) of awarded applicants provided moderate or strong evidence, accounting for 70% of allocated funds in these groups. Across all evidence tiers, the pre-preliminary group, at 27%, was second only to the strong group in terms of percentage of awarded applicants. The pre-preliminary group had the smallest fund allocation equivalent to 13% of awarded funds.

Exhibit 1: Percentage of Awarded Applicants and Awarded Funds by Evidence Tier, ASN Awarded Applicants in Education Focus Area (n = 79)

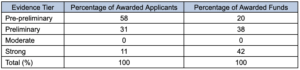

- The exhibit below shows that, in the economic opportunity focus area, a majority of awarded applicants (58%) provided pre-preliminary evidence and received 20% of the total allocated funds. The next highest awarded evidence tier was preliminary at 31%, which received 38% of allocated funds. Eleven percent of applicants provided strong evidence and this tier received the highest allocation of funds at 42%.

Exhibit 2: Percentage of Awarded Applicants and Awarded Funds by Evidence Tier, ASN Awarded Applicants in Economic Opportunity Focus Area (n = 34)
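The Exhibit 2 percentages quoted in the preceding paragraph can be tabulated and checked for internal consistency. The sketch below uses only the three tiers the text reports; the ratio of fund share to applicant share is an illustrative derived measure, not a statistic from the report.

```python
# (share of awarded applicants %, share of awarded funds %) as quoted in the text.
exhibit_2 = {
    "pre-preliminary": (58, 20),
    "preliminary": (31, 38),
    "strong": (11, 42),
}

# The three reported tiers should account for all applicants and all funds.
applicant_total = sum(applicants for applicants, _ in exhibit_2.values())
fund_total = sum(funds for _, funds in exhibit_2.values())

# Illustrative measure: fund share relative to applicant share for each tier.
funds_per_applicant_share = {
    tier: round(funds / applicants, 2)
    for tier, (applicants, funds) in exhibit_2.items()
}

print(f"Applicant shares sum to {applicant_total}%, fund shares sum to {fund_total}%")
for tier, ratio in funds_per_applicant_share.items():
    print(f"{tier}: {ratio} fund share per unit of applicant share")
```

Both columns sum to 100%, so the quoted figures leave no remaining share for a moderate tier in this focus area.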

- The AmeriCorps Seniors program staff are discussing the most feasible way to integrate research evidence into the RSVP grant competition. The program director and director of ORE have discussed the idea of requiring RSVP grantees to use best practices for volunteer management. In addition, staff have discussed ways to inform station-level volunteer management research on managing multiple life roles.

- Given the organizational capacity of many of the RSVP grantees and the size of the grants AmeriCorps awards to these organizations, supporting the integration of research-based practice as part of the grant’s performance expectations and reporting seems like the most reasonable approach to evidence-based grant making for this program. If AmeriCorps Seniors pursues this strategy, the program will provide technical assistance to the RSVP grantees and provide a possible pathway to certification [e.g., Council for Certification in Volunteer Administration (CCVA)]. Embedding evidence-based practices into organizational operations may also ensure that AmeriCorps resources remain accessible to communities and community-based organizations most in need of national service.

8.3 Did the agency use its five largest competitive grant programs to build evidence? (e.g., requiring grantees to participate in evaluations)

- AmeriCorps state and national grantees are required to evaluate their programs as part of the grant’s terms and conditions. Grantees receiving more than $500,000 are required to conduct an independent external evaluation (see p. 27 of the FY22 notice of funding for a description of these requirements). AmeriCorps program models vary. The AmeriCorps state and national program model relies on competitive and formula grants that are used by organizations to sponsor national service corps members. These grants are managed by state commissions. Members support projects (or program interventions) delivered by their sponsor organizations; these projects are designed to improve educational, employment, health, and environmental outcomes in their communities. Projects (or program interventions/models) are well-defined with respect to their target populations, their core activities/components including dosage and modalities, and their intended outcomes. Program participation is also typically well-defined and occurs within a specified period of time with clear beginning and end dates. As such, these models are highly amenable to program evaluations that rely on research designs capable of causal attributions.

8.4 Did the agency use evidence of effectiveness to allocate funds in any other competitive grant program (besides its five largest grant programs)?

- AmeriCorps administers only two competitive grant programs, described above.

8.5 What are the agency’s 1-2 strongest examples of how competitive grant recipients achieved better outcomes and/or built knowledge of what works or what does not?

- AmeriCorps has summarized the accomplishments of its competitive grant programs in a series of research briefs that describe the core components of effective interventions in the areas of education, economic opportunity, environmental stewardship, and health. The education brief was used to justify the FY19 funding priority for evidence-based interventions in the AmeriCorps state and national competition. All interventions described in these briefs illustrate how AmeriCorps’ competitive grant recipients have achieved better outcomes and built knowledge about what works. The agency released additional return-on-investment studies in FY22, all of which had positive findings.

8.6 Did the agency provide guidance that makes clear that city, county, and state government, and/or other grantees can or should use the funds they receive from these programs to conduct program evaluations and/or to strengthen their evaluation capacity building efforts?

- AmeriCorps’ state and national grantees, including city, county, tribal, and state governments, are required to use their AmeriCorps funds to evaluate their programs. In FY22, AmeriCorps awarded $8.5 million for the 2022 Terms and Conditions for Training and Technical Assistance Commission Investment Fund Grants that support state commissions, which are typically housed within state government. Approximately one-third of these grants will focus on building the capacity of state commissions and their grantees to collect and use performance and evaluation data.

- AmeriCorps’ Evidence Exchange includes a suite of scaling products to help grantees replicate evidence-based interventions.

3

Use of Evidence in Noncompetitive Grant Programs

Did the agency use evidence of effectiveness when allocating funds from its noncompetitive grant programs in FY22 (examples: evidence-based funding set-asides, requirements to invest funds in evidence-based activities, and pay for success provisions)?

9.1 What were the agency’s five largest noncompetitive programs and their appropriation amounts (and were city, county, and/or state governments eligible to receive funds from these programs)?

- In FY22, the five largest noncompetitive grant programs were:

- AmeriCorps state formula grants program: $156,000,000; eligible grantees: states;

- AmeriCorps NCCC: $34,500,000; eligible grantees: nonprofit organizations;

- AmeriCorps VISTA: $100,000,000; eligible grantees: nonprofit organizations, state, tribal, and local governments, institutions of higher education;

- Senior Corps Foster Grandparents: $122,000,000; eligible grantees: nonprofit organizations, local governments;

- Senior Corps Senior Companion Program: $54,000,000; eligible grantees: nonprofit organizations, local governments.

9.2 Did the agency use evidence of effectiveness to allocate funds in the largest five noncompetitive grant programs (e.g., are evidence-based interventions/practices required or suggested and is evidence a significant requirement)?

- The AmeriCorps VISTA, NCCC, and Seniors programs (Foster Grandparents, Senior Companions, and RSVP) are distinguished from the AmeriCorps State and National (ASN) model in three important ways. First, with the exception of RSVP (6% of the agency’s FY22 enacted operating budget), these are not competitive grant programs as defined in their authorizing legislation. Second, these programs focus primarily on member and volunteer experiences and outcomes. Third, the AmeriCorps VISTA and NCCC programs are more directly managed by AmeriCorps staff.

- Because these AmeriCorps programs emphasize human development across the life span, with participation in national service as the intervention for influencing positive developmental outcomes for members and volunteers, AmeriCorps’ approach to building evidence for these programs differs from a tiered evidence framework. AmeriCorps’ ORE has a robust research agenda for building evidence for these programs. Broadly speaking, the research strategy relies primarily on survey research conducted by the U.S. Census Bureau, AmeriCorps, or other organizations (e.g., the Urban Institute). This approach relies on research designs that capture data over time, mapped to periods of national service participation and follow-up. When feasible, AmeriCorps evaluations have used comparison groups of similar volunteers to determine the relative influence of AmeriCorps versus volunteering in general.

9.3 Did the agency use its five largest noncompetitive grant programs to build evidence? (e.g., requiring grantees to participate in evaluations)

- Examples of studies that have contributed to the country’s and the agency’s body of evidence for national service and volunteering include the following:

- U.S. Current Population Survey Civic Engagement and Volunteering Supplement: AmeriCorps sponsors this nationally representative survey biennially;

- Member Exit Survey: AmeriCorps State and National, VISTA, and NCCC members complete this survey when they exit their national service program; measures civic engagement, bridging differences, Getting Things Done and life and career skills pathways;

- Alumni Outcome Survey Studies: Measure educational, employment, and civic engagement outcomes over time;

- NCCC Leadership Longitudinal Survey: Administered one year following service and includes a comparison group of those who apply but do not enroll and those who leave the program early (AmeriCorps will revise this survey in FY23-24 to include ACS volunteers and create a pre/post administration cadence);

- Senior Corps Longitudinal Survey: Administered at one-year follow-up for new volunteers and a comparison group of similar volunteers (AmeriCorps Seniors was formerly Senior Corps);

- AmeriCorps National Service and Civic Engagement Research Grant Program (see Snapshot of AmeriCorps’ research grantee activities | AmeriCorps): sponsored research studies to build evidence about civic engagement outcomes. Two examples to illustrate:

- “Impacts of Adolescent and Young Adult Civic Engagement on Health and Socioeconomic Status in Adulthood” by P. J. Ballard, L. T. Hoyt, and M. C. Pachucki [Child Development, 90(4), 2019];

- “Can Government Intervention Increase Volunteers and Donations? Analyzing the Influence of VISTA With a Matched Design” by A. Messamore, P. Paxton, and K. Velasco [Administration & Society, 53(10), 2021];

- Targeted Return on Investment Analysis: An empirical study demonstrating the monetary value of national service programs for members and volunteers [see Improving Lives, Strengthening Communities, and Fostering Civic Engagement Through Service (americorps.gov) for more information on evidence].

9.4 Did the agency use evidence of effectiveness to allocate funds in any other noncompetitive grant programs (besides its five largest grant programs)?

- AmeriCorps administers only five noncompetitive grant programs, as described above.

9.5 What are the agency’s 1-2 strongest examples of how noncompetitive grant recipients achieved better outcomes and/or built knowledge of what works or what does not?

- In 2022, AmeriCorps’ ORE hosted a webinar entitled “Creating Successful Volunteering and National Service Opportunities: Best Practices and Benefit” that included a conversation with 2017 AmeriCorps National Service and Civic Engagement Research Grant recipients on lessons learned with regard to recruiting and supporting diverse members and preparing them for success after their year of service.

9.6 Did the agency provide guidance that makes clear that city, county, and state government, and/or other grantees can or should use the funds they receive from these programs to conduct program evaluations and/or to strengthen their evaluation capacity-building efforts?

- AmeriCorps does not prohibit the use of formula dollars for evaluation, but each state commission may have its own guidelines. Further, formula grantees awarded more than $500,000 must perform evaluations using their grant funds.

6

Repurpose for Results

In FY22, did the agency shift funds away from or within any practice, policy, or program that consistently failed to achieve desired outcomes (examples: requiring low-performing grantees to re-compete for funding; removing ineffective interventions from allowable use of grant funds; incentivizing or urging grant applicants to stop using ineffective practices in funding announcements; proposing the elimination of ineffective programs through annual budget requests; incentivizing well-designed trials to fill specific knowledge gaps; supporting low-performing grantees through mentoring, improvement plans, and other forms of assistance; using rigorous evaluation results to shift funds away from a program)?

10.1 Did the agency have policy(ies) for determining when to shift funds away from grantees, practices, policies, interventions, and/or programs that consistently failed to achieve desired outcomes, and did the agency act on that policy?

- AmeriCorps State and National denied funding to ten FY22 applicants that requested $3,786,174 for new or recompete funding because they did not demonstrate evidence for the proposed program. These funds were reallocated and invested in applications with a demonstrated evidence base.

10.2 Did the agency identify and provide support to agency programs or grantees that failed to achieve desired outcomes?

- AmeriCorps portfolio managers continued to use a grant management tool (the Portfolio Navigator) that allows them to access data about grantee organizations in real time to facilitate improved oversight and support.

- AmeriCorps’ ORE continued to invest $918,169 in evaluation technical assistance support for grantees. This funding is available to all competitive AmeriCorps state and national grantees seeking to improve their ability to empirically demonstrate their effectiveness. This support was expanded to AmeriCorps Seniors and VISTA grantees in FY22.